model expected the shape of dimension 0 to be between 1 and 1 but received 32

I want to use dynamic batching with a TensorRT plan model in Triton. I took the pretrained ResNet-50 model from PyTorch Hub and converted it to ONNX.

Model input and output information

Then I used trtexec to convert the ONNX model into a TensorRT engine.

    trtexec --onnx=resnet50_onnx_bs.onnx \
        --saveEngine=model.plan \
        --explicitBatch \
        --minShapes=input:1x3x224x224 \
        --optShapes=input:8x3x224x224 \
        --maxShapes=input:32x3x224x224
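The min/opt/max shape flags only matter for dimensions that are dynamic in the ONNX file itself. One possible cause of a batch range of 1 to 1 is an export with a fixed batch dimension; that is an assumption here, since the export step is not shown. A minimal sketch of an export that marks axis 0 as dynamic, using the tensor names `input` and `output` from the client code (the helper names `batch_dynamic_axes` and `export_with_dynamic_batch` are hypothetical):

```python
def batch_dynamic_axes(input_names, output_names):
    """Build a dynamic_axes mapping that marks axis 0 of each tensor as 'batch'."""
    return {name: {0: "batch"} for name in list(input_names) + list(output_names)}


def export_with_dynamic_batch(model, path, input_name="input", output_name="output"):
    """Export `model` to ONNX with a dynamic batch axis (hypothetical helper)."""
    import torch  # imported lazily so the helper above works without PyTorch

    model.eval()
    dummy = torch.randn(1, 3, 224, 224)  # batch size here is only a tracing example
    torch.onnx.export(
        model,
        dummy,
        path,
        input_names=[input_name],
        output_names=[output_name],
        dynamic_axes=batch_dynamic_axes([input_name], [output_name]),
    )
```

After re-exporting this way, the engine would need to be rebuilt with the trtexec command above so the 1 to 32 profile is actually baked into `model.plan`.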

To deploy the model on Triton server, I followed the instructions; the directory layout is

Config file

Start triton server

Client code

    import time

    import numpy as np
    import tritonclient.http as httpclient
    from PIL import Image

    if __name__ == "__main__":
        triton_client = httpclient.InferenceServerClient(url='127.0.0.1:8000')

        # Load one image, scale to [0, 1], and repeat it to build a batch of 32.
        image = Image.open('./dog.jpg')
        image = image.resize((224, 224), Image.ANTIALIAS)
        image = np.asarray(image)
        image = image / 255
        image = np.expand_dims(image, axis=0)
        image = np.array(np.repeat(image, 32, axis=0), dtype=np.float32)
        # NHWC -> NCHW, the layout the model expects.
        image = np.transpose(image, axes=[0, 3, 1, 2])
        image = image.astype(np.float32)
        print("input shape: ", image.shape)  # 32,3,224,224

        inputs = []
        inputs.append(httpclient.InferInput('input', image.shape, "FP32"))
        inputs[0].set_data_from_numpy(image, binary_data=False)

        outputs = []
        outputs.append(httpclient.InferRequestedOutput('output', binary_data=False))

        results = triton_client.infer('trt-resnet50', inputs=inputs, outputs=outputs)

Unfortunately, I get the following error.

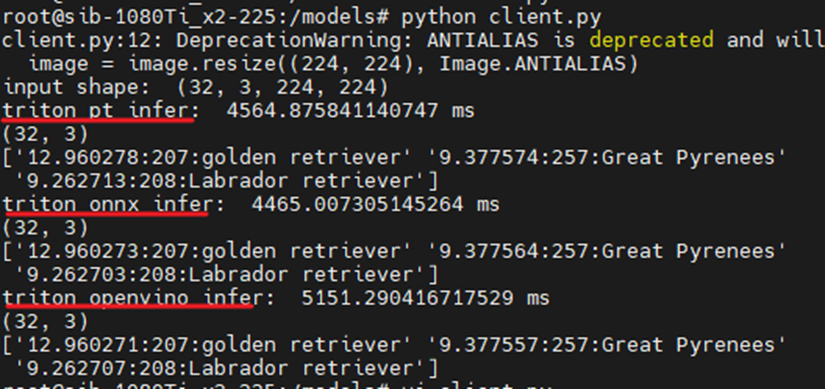

    root@sib-1080Ti_x2-225:/models# python client.py
    client.py:12: DeprecationWarning: ANTIALIAS is deprecated and will be removed in Pillow 10 (2023-07-01). Use Resampling.LANCZOS instead.
      image = image.resize((224, 224), Image.ANTIALIAS)
    input shape: (32, 3, 224, 224)
    Traceback (most recent call last):
      File "client.py", line 58, in <module>
        results = triton_client.infer('trt-resnet50', inputs=inputs, outputs=outputs)
      File "/usr/local/lib/python3.8/dist-packages/tritonclient/http/__init__.py", line 1372, in infer
        _raise_if_error(response)
      File "/usr/local/lib/python3.8/dist-packages/tritonclient/http/__init__.py", line 64, in _raise_if_error
        raise error
    tritonclient.utils.InferenceServerException: request specifies invalid shape for input 'input' for trt-resnet50. Error details: model expected the shape of dimension 0 to be between 1 and 1 but received 32
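The message reads as a range check on dimension 0: the loaded engine appears to accept only batch size 1, not the 1 to 32 range requested from trtexec. The sketch below only illustrates that kind of check; it is not Triton's actual implementation, and `check_dim_range` is a hypothetical name.

```python
def check_dim_range(dim_index, received, min_size, max_size):
    """Return an error string if `received` lies outside [min_size, max_size], else None."""
    if min_size <= received <= max_size:
        return None
    return (
        f"model expected the shape of dimension {dim_index} to be between "
        f"{min_size} and {max_size} but received {received}"
    )


# Range the server seems to have loaded, judging by the error: batch fixed at 1.
print(check_dim_range(0, 32, 1, 1))
# Range the trtexec flags asked for: batch 1 to 32, which would accept the request.
print(check_dim_range(0, 32, 1, 32))
```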

I use a similar configuration for PyTorch/ONNX/OpenVINO, and those models return results successfully. My questions are:

- Does TensorRT support dynamic batching, and how can I solve the error above?

- Can I set "execution_accelerators" in the ONNX config as an alternative? Something like this:

    optimization { execution_accelerators {
      gpu_execution_accelerator : [ {
        name : "tensorrt"
        parameters { key: "precision_mode" value: "FP16" }
        parameters { key: "max_workspace_size_bytes" value: "1073741824" }
      }]
    }}

Thanks for replying. I tried the following command

Then I used the same client code, but I still get the dimension error.
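While the engine still rejects larger batches, one stopgap for confirming that inference itself works is to send the data in chunks no larger than the batch size the server accepts. This is only a sketch of the slicing step; `batch_slices` is a hypothetical helper, and the limit of 8 in the example is an arbitrary assumption.

```python
def batch_slices(total, max_batch):
    """Yield slice objects covering range(total) in chunks of at most max_batch items."""
    if max_batch < 1:
        raise ValueError("max_batch must be at least 1")
    for start in range(0, total, max_batch):
        yield slice(start, min(start + max_batch, total))


# Example: 32 images split for a server that accepts at most 8 per request.
for s in batch_slices(32, 8):
    print(s.start, s.stop)
```

Each slice can index the batched array from the client code (for example `image[s]`), and the resulting chunk is sent as its own request with its own shape.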

(By the way, I don't know how to cc someone. Do you mean @ someone?)

There are still questions bothering me.

I didn't test on TensorRT because of the dimension error, but it works for TorchScript/TF/ONNX.

Closing as the original issue is resolved.