bestmodel.pth size shrank while it was being uploaded

wandb --version && python --version && uname

- Weights and Biases version: 0.8.25

- Python version: 3.7.6

- Operating System: Linux

Description

While using the fastai WandbCallback to train a model in a Jupyter notebook, with something like:

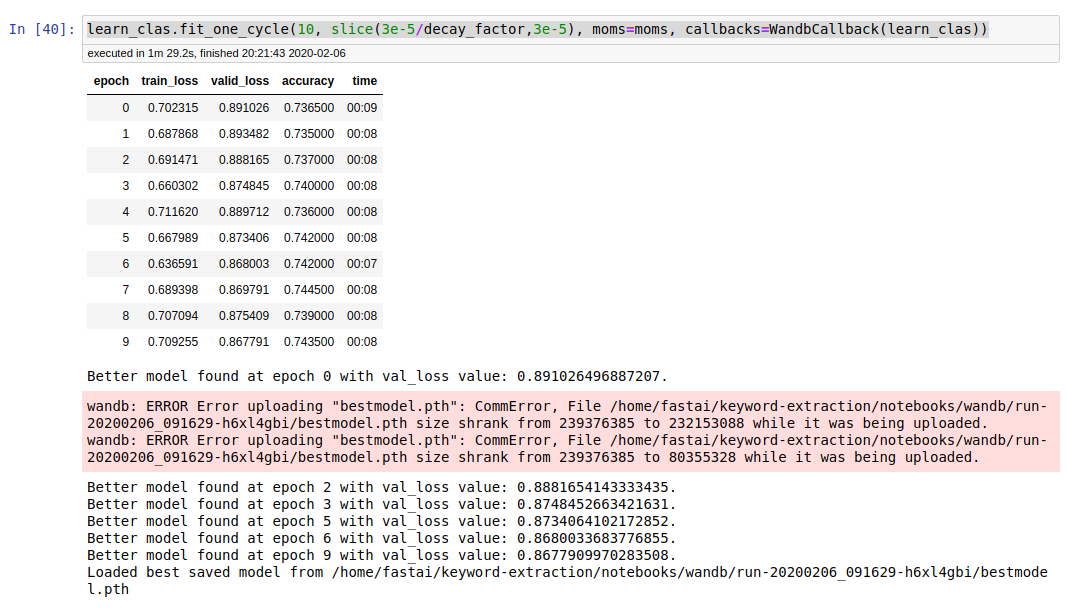

learn_clas.fit_one_cycle(10, slice(2e-3/decay_factor,2e-3), moms=moms, callbacks=WandbCallback(learn_clas))

#... do some other stuff

learn_clas.fit_one_cycle(10, slice(3e-5/decay_factor,3e-5), moms=moms, callbacks=WandbCallback(learn_clas))

In the output written to the notebook, I often see errors such as:

wandb: ERROR Error uploading "bestmodel.pth": CommError, File /home/fastai/keyword-extraction/notebooks/wandb/run-20200206_091629-h6xl4gbi/bestmodel.pth size shrank from 239376385 to 232153088 while it was being uploaded.

wandb: ERROR Error uploading "bestmodel.pth": CommError, File /home/fastai/keyword-extraction/notebooks/wandb/run-20200206_091629-h6xl4gbi/bestmodel.pth size shrank from 239376385 to 80355328 while it was being uploaded.

It appears that either there’s some kind of race condition, or the file is being overwritten by the second training loop while it is still being uploaded from the first.
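If the second explanation is right, one way to sidestep it, assuming the uploader simply reads from whatever path it is given, is to hand it a private, uniquely named copy of the checkpoint instead of the live file that the next `fit_one_cycle` call may rewrite. The sketch below shows the idea; `snapshot_for_upload` is a hypothetical helper, not part of wandb or fastai.

```python
import os
import shutil
import tempfile


def snapshot_for_upload(path, snapshot_dir=None):
    """Copy `path` to a uniquely named file and return the copy's path.

    The copy belongs to a single upload, so later writes to `path`
    (e.g. a second training loop saving the same checkpoint name)
    cannot change it while it is being read.
    """
    base, ext = os.path.splitext(os.path.basename(path))
    # mkstemp guarantees a fresh, unique file name in `snapshot_dir`.
    fd, snap_path = tempfile.mkstemp(prefix=base + "-", suffix=ext, dir=snapshot_dir)
    os.close(fd)  # only the unique name is needed; copy2 reopens the file
    shutil.copy2(path, snap_path)  # copy contents and metadata
    return snap_path
```

The copy itself still has to be taken while nothing is writing the original (for instance, right after a save completes); the snapshot only protects the upload that follows.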

Update:

It’s also happening with many other files, e.g.:

wandb: ERROR Error uploading "___batch_archive_1.tgz": CommError, File /tmp/tmpnn7k_ppewandb/___batch_archive_1.tgz size shrank from 416086 to 301398 while it was being uploaded.

Exception in thread Thread-78:
Traceback (most recent call last):
  File "/home/fastai/anaconda3/envs/keyword-extraction/lib/python3.7/threading.py", line 917, in _bootstrap_inner
    self.run()
  File "/home/fastai/anaconda3/envs/keyword-extraction/lib/python3.7/site-packages/wandb/file_pusher.py", line 85, in run
    self.cleanup_file()
  File "/home/fastai/anaconda3/envs/keyword-extraction/lib/python3.7/site-packages/wandb/file_pusher.py", line 154, in cleanup_file
    os.unlink(self.tgz_path)
FileNotFoundError: [Errno 2] No such file or directory: '/tmp/tmpnn7k_ppewandb/batch-1.tgz'
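The traceback shows `cleanup_file` calling `os.unlink` on a temporary archive that no longer exists, which again hints at two workers touching the same temporary path. A cleanup step that treats an already-missing file as done would at least stop the thread from crashing. The sketch below is illustrative only (`remove_if_present` is a hypothetical name), and it hides the symptom rather than fixing any shared-path race.

```python
import os


def remove_if_present(path):
    """Delete `path`; return False instead of raising if it is already gone."""
    try:
        os.unlink(path)
    except FileNotFoundError:
        # Another thread (or an earlier cleanup) already removed the file.
        return False
    return True
```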

Issue Analytics

- Created 4 years ago

- Comments: 15 (7 by maintainers)


@borisdayma I just switched from Optuna to Ray and am no longer experiencing issues like this one. I’m tuning with transformers 3.5.0 now, but I was experiencing the above issue before, with transformers 3.4.0 (again using Optuna).

Hey guys, can you try our new client library? We’re about a week away from the official release, but the errors you’re seeing should be fixed. You can install it with:

pip install wandb -U --pre