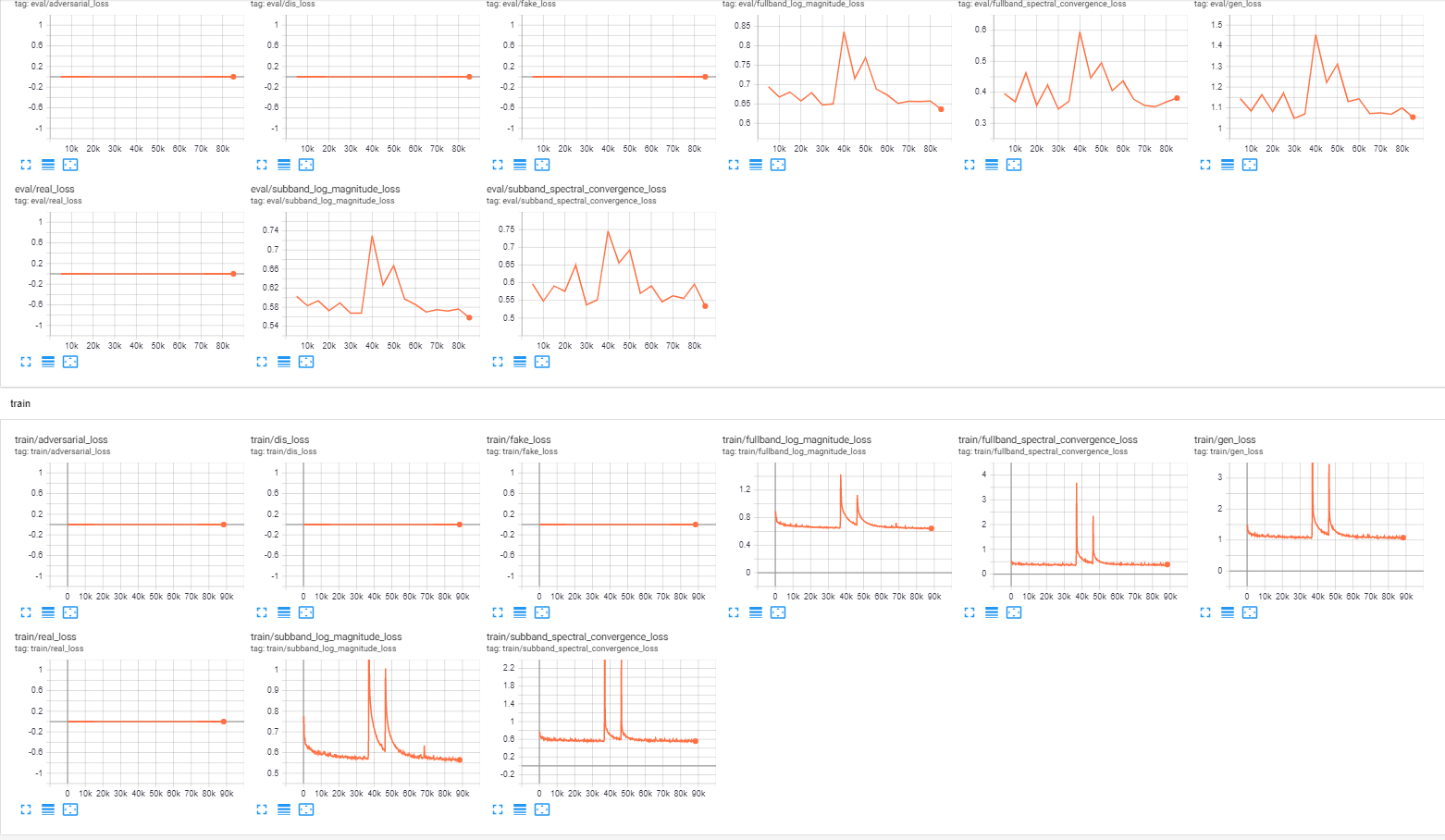

MB-MelGAN fine-tuning has big loss spikes, loss does not improve

Hi,

I am trying to fine-tune the pretrained multiband_melgan.v1_24k model on LibriTTS + my speaker.

I’m aware that MB-MelGAN requires a lot more steps, but I am opening this issue because my TensorBoard curve looks very unusual: the loss has large spikes and does not improve.

I am training on a machine with Xeon E5-2623 v4 + 4x1080ti + 128GB RAM, so memory should not be an issue this time. (on this topic, should I be seeing just 4it/s on this hardware?)

Attempt 1:

(These logs are from another training session, but the same problems happen)

2020-11-10 02:02:00,529 (base_trainer:566) INFO: (Step: 2400) train_subband_spectral_convergence_loss = 0.6038.

2020-11-10 02:02:00,534 (base_trainer:566) INFO: (Step: 2400) train_subband_log_magnitude_loss = 0.6201.

2020-11-10 02:02:00,538 (base_trainer:566) INFO: (Step: 2400) train_fullband_spectral_convergence_loss = 0.4016.

2020-11-10 02:02:00,543 (base_trainer:566) INFO: (Step: 2400) train_fullband_log_magnitude_loss = 0.7134.

2020-11-10 02:02:00,548 (base_trainer:566) INFO: (Step: 2400) train_gen_loss = 1.1694.

2020-11-10 02:02:00,552 (base_trainer:566) INFO: (Step: 2400) train_real_loss = 0.0000.

2020-11-10 02:02:00,557 (base_trainer:566) INFO: (Step: 2400) train_fake_loss = 0.0000.

2020-11-10 02:02:00,562 (base_trainer:566) INFO: (Step: 2400) train_dis_loss = 0.0000.

^M[train]: 0%| | 2401/4000000 [10:32<292:02:52, 3.80it/s]^M[train]: 0%| | 2402/4000000 [10:33<269:08:44, 4.13it/s]^M[train]: 0%| | 2403/4000000 [10:33<265:15:08, 4.19it/s]^M[train]: 0%| | 2$

^M[train]: 0%| | 2521/4000000 [10:58<658:17:57, 1.69it/s]^M[train]: 0%| | 2522/4000000 [10:58<519:10:25, 2.14it/s]^M[train]: 0%| | 2523/4000000 [10:58<424:09:18, 2.62it/s]^M[train]: 0%| | 2$

2020-11-10 02:02:41,702 (base_trainer:566) INFO: (Step: 2600) train_subband_spectral_convergence_loss = 9.2314.

2020-11-10 02:02:41,706 (base_trainer:566) INFO: (Step: 2600) train_subband_log_magnitude_loss = 1.1278.

2020-11-10 02:02:41,711 (base_trainer:566) INFO: (Step: 2600) train_fullband_spectral_convergence_loss = 2.4215.

2020-11-10 02:02:41,716 (base_trainer:566) INFO: (Step: 2600) train_fullband_log_magnitude_loss = 1.2601.

2020-11-10 02:02:41,720 (base_trainer:566) INFO: (Step: 2600) train_gen_loss = 7.0204.

2020-11-10 02:02:41,725 (base_trainer:566) INFO: (Step: 2600) train_real_loss = 0.0000.

2020-11-10 02:02:41,730 (base_trainer:566) INFO: (Step: 2600) train_fake_loss = 0.0000.

2020-11-10 02:02:41,734 (base_trainer:566) INFO: (Step: 2600) train_dis_loss = 0.0000.

^M[train]: 0%| | 2601/4000000 [11:14<253:23:40, 4.38it/s]^M[train]: 0%| | 2602/4000000 [11:14<244:25:47, 4.54it/s]^M[train]: 0%| | 2603/4000000 [11:14<229:49:07, 4.83it/s]^M[train]: 0%| | 2$

2020-11-10 02:03:19,283 (base_trainer:566) INFO: (Step: 2800) train_subband_spectral_convergence_loss = 1.1394.

2020-11-10 02:03:19,288 (base_trainer:566) INFO: (Step: 2800) train_subband_log_magnitude_loss = 1.0550.

2020-11-10 02:03:19,294 (base_trainer:566) INFO: (Step: 2800) train_fullband_spectral_convergence_loss = 0.9998.

2020-11-10 02:03:19,299 (base_trainer:566) INFO: (Step: 2800) train_fullband_log_magnitude_loss = 1.1900.

2020-11-10 02:03:19,304 (base_trainer:566) INFO: (Step: 2800) train_gen_loss = 2.1921.

2020-11-10 02:03:19,310 (base_trainer:566) INFO: (Step: 2800) train_real_loss = 0.0000.

2020-11-10 02:03:19,315 (base_trainer:566) INFO: (Step: 2800) train_fake_loss = 0.0000.

2020-11-10 02:03:19,320 (base_trainer:566) INFO: (Step: 2800) train_dis_loss = 0.0000.

Is this normal behaviour?
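To make the spike easier to point at than a TensorBoard screenshot, here is a minimal sketch that scans trainer log lines like the ones above and flags any logged value that jumps sharply relative to the previous one. The helper names (`parse_losses`, `find_spikes`) and the 3x threshold are illustrative assumptions, not part of the training code.

```python
import re
from collections import defaultdict

# Matches lines such as:
# "... INFO: (Step: 2600) train_gen_loss = 7.0204."
LOG_PATTERN = re.compile(r"\(Step: (\d+)\) (\w+) = (\d+\.\d+)")


def parse_losses(lines):
    """Return {metric_name: [(step, value), ...]} from trainer log lines."""
    history = defaultdict(list)
    for line in lines:
        match = LOG_PATTERN.search(line)
        if match:  # progress-bar lines and blanks are skipped
            step, name, value = match.groups()
            history[name].append((int(step), float(value)))
    return history


def find_spikes(series, ratio=3.0):
    """Flag steps whose value exceeds `ratio` times the previous logged value.

    `ratio` is an arbitrary illustrative threshold.
    """
    spikes = []
    for (_, prev), (step, cur) in zip(series, series[1:]):
        if prev > 0 and cur > ratio * prev:
            spikes.append((step, cur))
    return spikes


# Three train_gen_loss lines copied from the log excerpt above.
sample_log = [
    "2020-11-10 02:02:00,548 (base_trainer:566) INFO: (Step: 2400) train_gen_loss = 1.1694.",
    "2020-11-10 02:02:41,720 (base_trainer:566) INFO: (Step: 2600) train_gen_loss = 7.0204.",
    "2020-11-10 02:03:19,304 (base_trainer:566) INFO: (Step: 2800) train_gen_loss = 2.1921.",
]

history = parse_losses(sample_log)
print(find_spikes(history["train_gen_loss"]))  # [(2600, 7.0204)]
```

On the excerpt above this flags step 2600, where `train_gen_loss` jumps from 1.1694 to 7.0204 before falling back to 2.1921 at step 2800.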

Issue Analytics

- State:

- Created 3 years ago

- Comments:5 (3 by maintainers)

@OscarVanL it’s normal 😃. After 200k steps, training will stop and you need to resume it; it will then train both the Generator and the Discriminator. It should train for around 1M steps to get the best performance.
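This also explains why `train_real_loss`, `train_fake_loss` and `train_dis_loss` are all 0.0000 in the logs: before the 200k mark only the generator is trained, on the STFT losses. Below is a minimal sketch of that gating, not the trainer's actual code. The constant name, the function names and `lambda_adv` are hypothetical, and the 0.5 weighting is inferred from the logged numbers rather than taken from the source.

```python
# Assumption: taken from the "200k" mentioned in the reply, not read from a config.
DISCRIMINATOR_START_STEP = 200_000


def stft_loss(sub_sc, sub_mag, full_sc, full_mag):
    """Combine sub-band and full-band STFT terms.

    The 0.5 factor is inferred from the logged values, where train_gen_loss
    equals half the sum of the four logged STFT terms.
    """
    return 0.5 * ((sub_sc + sub_mag) + (full_sc + full_mag))


def generator_loss(step, stft, adversarial=0.0, lambda_adv=1.0):
    """Generator objective: STFT-only during warm-up, plus adversarial term after.

    `lambda_adv` is a placeholder weight for illustration.
    """
    if step < DISCRIMINATOR_START_STEP:
        return stft
    return stft + lambda_adv * adversarial


def discriminator_loss(step, real_loss, fake_loss):
    """Discriminator objective: not trained (logged as zero) during warm-up."""
    if step < DISCRIMINATOR_START_STEP:
        return 0.0
    return real_loss + fake_loss


# Values logged at step 2600 in the issue above.
warmup_gen = generator_loss(2600, stft_loss(9.2314, 1.1278, 2.4215, 1.2601))
print(f"{warmup_gen:.4f}")                # 7.0204, the logged train_gen_loss
print(discriminator_loss(2600, 0.3, 0.4))  # 0.0 during warm-up
```

With the step-2600 values from the issue, the warm-up generator loss reproduces the logged `train_gen_loss` of 7.0204. This is consistent with the adversarial terms being inactive at this point in training.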

@aragorntheking

Yes it did, in particular, it reduced the buzzing/noise in the background.

I did not restrict any layers; I just started the training job over the pretrained multiband_melgan.v1_24k vocoder. I did train with all speakers, not just my one voice. It did improve performance in general.

I think with just one voice it’s likely to overfit (obviously, this depends on how much speech you have for that speaker), hence why all speakers performed better for me.