Cross-encoder fp16 AMP training: use_amp=True didn't work

I encountered a problem when I tried to use AMP with the cross encoder for fp16 training.

My torch version==1.7.0, python==3.7 and

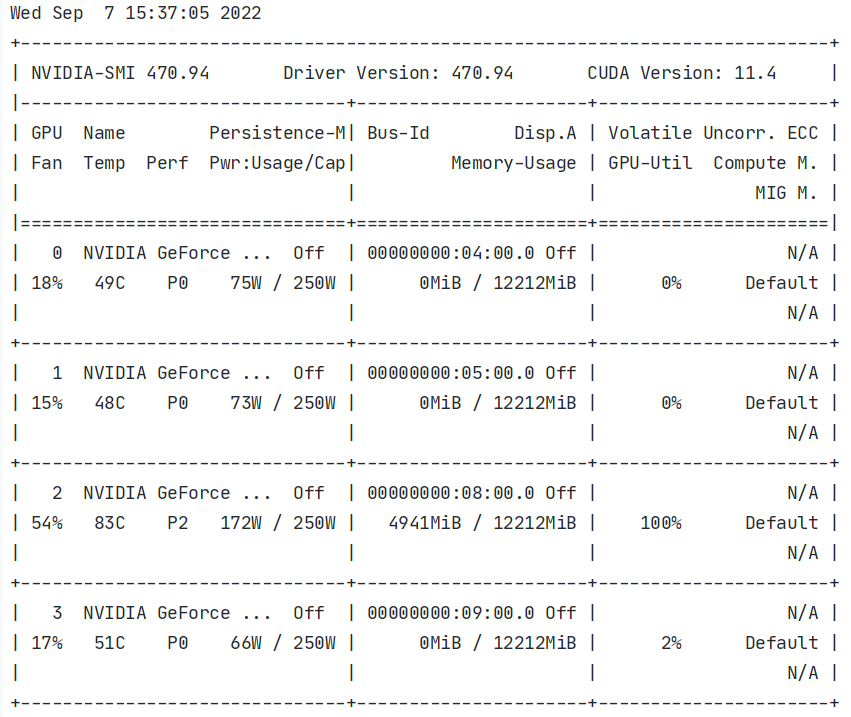

GPU:

I just set `use_amp=True` like this:

```python
model.fit(train_dataloader=train_dataloader, evaluator=evaluator, epochs=num_epochs, warmup_steps=warmup_steps, output_path=model_save_path, use_amp=True)
```

However, training runs at the same speed as with `use_amp=False`, and I don't know why. Could you help me fix this?
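For context on what `use_amp=True` is expected to switch on, here is a minimal sketch of PyTorch's native AMP pattern (`torch.cuda.amp.autocast` plus `torch.cuda.amp.GradScaler`, added in PyTorch 1.6, so present in torch 1.7.0). It assumes, without confirming it from the library source, that `CrossEncoder.fit` wraps its forward pass in roughly this way. The function `train_toy_model`, the small MLP and the random data are hypothetical stand-ins for the real cross-encoder and dataloader.

```python
import contextlib

import torch
from torch import nn


def train_toy_model(use_amp: bool, steps: int = 5, seed: int = 0) -> list:
    """Run a few optimizer steps on a hypothetical toy model, optionally with AMP."""
    torch.manual_seed(seed)
    device = "cuda" if torch.cuda.is_available() else "cpu"
    # torch.cuda.amp only affects CUDA tensors, so fall back to fp32 on CPU.
    use_amp = use_amp and device == "cuda"

    # Hypothetical stand-in for a cross-encoder: a small MLP scoring head.
    model = nn.Sequential(nn.Linear(64, 32), nn.ReLU(), nn.Linear(32, 1)).to(device)
    optimizer = torch.optim.AdamW(model.parameters(), lr=1e-3)
    loss_fn = nn.BCEWithLogitsLoss()
    # GradScaler scales the loss up before backward so small fp16 gradients do
    # not underflow; with enabled=False its methods are simple pass-throughs.
    scaler = torch.cuda.amp.GradScaler(enabled=use_amp)

    # Synthetic features and binary labels, for illustration only.
    features = torch.randn(128, 64, device=device)
    labels = torch.randint(0, 2, (128, 1), device=device).float()

    losses = []
    for _ in range(steps):
        optimizer.zero_grad()
        # autocast runs eligible ops (e.g. the matmuls in nn.Linear) in fp16.
        autocast_ctx = torch.cuda.amp.autocast() if use_amp else contextlib.nullcontext()
        with autocast_ctx:
            logits = model(features)
            loss = loss_fn(logits, labels)
        scaler.scale(loss).backward()  # backward runs outside the autocast block
        scaler.step(optimizer)  # when enabled: unscales grads, skips step on inf/NaN
        scaler.update()
        losses.append(loss.item())
    return losses


if __name__ == "__main__":
    print(train_toy_model(use_amp=True))
```

Note that autocast only changes the dtype the matmuls run in; whether that is actually faster depends on the GPU having fast fp16 hardware (Tensor Cores, from compute capability 7.0 onward). On GPUs without them, step time with AMP enabled can stay about the same as in fp32.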

Issue analytics: created a year ago; 5 comments (2 by maintainers).

They don’t support fp16

Oh, thank you. That's a bit unfortunate.
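Assuming the maintainer's reply refers to the reporter's GPU (its model is not recoverable from this copy of the thread), one quick way to check whether a GPU is likely to benefit from AMP is to look at its CUDA compute capability: Tensor Cores first appeared with Volta (compute capability 7.0). The helper `has_tensor_cores` is a hypothetical name used for illustration; `torch.cuda.get_device_capability` and `torch.cuda.get_device_name` are existing PyTorch functions.

```python
from typing import Tuple


def has_tensor_cores(capability: Tuple[int, int]) -> bool:
    """Return True if a CUDA compute capability (major, minor) includes Tensor Cores.

    Tensor Cores arrived with Volta (7.0); Pascal (6.x) and older GPUs have none,
    so AMP's fp16 matmuls usually bring little or no speed-up there.
    """
    return tuple(capability) >= (7, 0)


if __name__ == "__main__":
    try:
        import torch

        if torch.cuda.is_available():
            cap = torch.cuda.get_device_capability(0)
            name = torch.cuda.get_device_name(0)
            print(f"{name}: compute capability {cap}, Tensor Cores: {has_tensor_cores(cap)}")
        else:
            print("No CUDA device is visible to PyTorch.")
    except ImportError:
        print("PyTorch is not installed.")
```

Comparing tuples keeps the check simple: `(7, 5)` (Turing) and `(8, 6)` (Ampere) pass, while `(6, 1)` (consumer Pascal) does not.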