Troubleshooting Common Issues in Tiangolo FastAPI

Project Description

FastAPI is a modern, fast (high-performance) web framework for building APIs with Python 3.7+ based on standard Python type hints. It is one of the fastest Python web frameworks available. FastAPI is developed and maintained by Sebastián Ramírez, also known as “Tiangolo” on the web.

FastAPI is designed to be easy to learn and use, and it is especially well suited to building microservices and serving machine learning models through APIs. It is built on top of Starlette, a lightweight ASGI framework, and uses modern Python features such as async/await to achieve high performance and fast response times. FastAPI also integrates well with other popular libraries and tools such as PostgreSQL, Redis, and Tortoise-ORM.

The framework is intuitive, with automatic validation of request and response data, and automatic documentation using OpenAPI and Swagger. It also has support for dependency injection, which can be used to create more modular and testable code.

Overall, FastAPI is a very powerful and flexible tool for building APIs with Python, and it is quickly gaining popularity in the Python web development community.

Troubleshooting Tiangolo FastAPI with the Lightrun Developer Observability Platform

Lightrun is a Developer Observability Platform, allowing developers to add telemetry to live applications in real-time, on-demand, and right from the IDE.

- Instantly add logs to, set metrics in, and take snapshots of live applications

- Insights delivered straight to your IDE or CLI

- Works where you do: dev, QA, staging, CI/CD, and production

The following are the most popular issues reported for this project:

How to use pydantic and sqlalchemy models with relationship

If you want to use Pydantic and SQLAlchemy models with relationships in a FastAPI application, you can follow these steps:

- Define your SQLAlchemy models as usual, using `declarative_base()` and the column types provided by SQLAlchemy. Express relationships with a `ForeignKey` column plus the `relationship()` function.

- Define your Pydantic models (schemas) with `BaseModel`. Pydantic has no `ForeignKey` field type: represent a relationship either as a plain ID field (for example, `user_id: int`) or as a nested model (for example, `tasks: List[Task]`).

- Enable ORM mode on the Pydantic models (`model_config = ConfigDict(from_attributes=True)` in Pydantic v2, `orm_mode = True` in the inner `Config` class in Pydantic v1) so they can be built directly from SQLAlchemy objects, including their relationship attributes. You do not need a custom `__init__` on the SQLAlchemy models.

- In your path operations, use the Pydantic models to validate the request data, create SQLAlchemy instances from them (for example, `TaskModel(**task.model_dump())`, or `task.dict()` in Pydantic v1), and set `response_model` so FastAPI converts the returned ORM objects back into Pydantic models.

- SQLAlchemy models have no built-in `create` function: to insert a record, add the new instance to a session with `session.add()` and call `session.commit()`.

Here is an example of how you might use pydantic and SQLAlchemy with FastAPI:

```python
from typing import List

from fastapi import FastAPI
from pydantic import BaseModel, ConfigDict
from sqlalchemy import Column, ForeignKey, Integer, String, create_engine
from sqlalchemy.orm import declarative_base, relationship, sessionmaker

# Set up SQLAlchemy (the SQLite URL is only an example)
engine = create_engine("sqlite:///./app.db", connect_args={"check_same_thread": False})
Session = sessionmaker(bind=engine)
Base = declarative_base()

# SQLAlchemy model
class UserModel(Base):
    __tablename__ = "users"
    id = Column(Integer, primary_key=True)
    name = Column(String)
    # One-to-many relationship: a user has many tasks
    tasks = relationship("TaskModel", back_populates="user")

# SQLAlchemy model with a foreign key and a relationship
class TaskModel(Base):
    __tablename__ = "tasks"
    id = Column(Integer, primary_key=True)
    user_id = Column(Integer, ForeignKey("users.id"))
    description = Column(String)
    user = relationship("UserModel", back_populates="tasks")

# Pydantic model: the foreign key is a plain int
class Task(BaseModel):
    # Pydantic v2; with Pydantic v1 use `class Config: orm_mode = True`
    model_config = ConfigDict(from_attributes=True)
    id: int
    user_id: int
    description: str

# Pydantic model with a nested relationship
class User(BaseModel):
    model_config = ConfigDict(from_attributes=True)
    id: int
    name: str
    tasks: List[Task] = []  # read from UserModel.tasks

Base.metadata.create_all(bind=engine)

app = FastAPI()
```

How to gracefully stop FastAPI app ?

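For production deployments, the Uvicorn documentation recommends running Uvicorn under Gunicorn with the Uvicorn worker class. Gunicorn manages the worker processes and handles shutdown signals: on TERM it performs a graceful shutdown, waiting for the workers to finish their current requests for up to `graceful_timeout` seconds. See the official documentation for further guidance:

https://www.uvicorn.org/deployment/#gunicorn

$ gunicorn main:app -w 4 -k uvicorn.workers.UvicornWorker

Here `main:app` is a placeholder for your module and FastAPI application object.

Personally, I prefer a Gunicorn configuration file over command-line options. The link below points to the 20.0.4 documentation, the latest release on PyPI (Python Package Index) at the time of writing, because 20.1.x had not been published yet.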

https://docs.gunicorn.org/en/20.0.4/configure.html#configuration-file
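A configuration file equivalent to the command above might look like the following sketch. `bind`, `workers`, `worker_class`, `graceful_timeout` and `timeout` are standard Gunicorn settings; the values shown are assumptions to adjust for your deployment. You would start it with `gunicorn -c gunicorn.conf.py main:app`.

```python
# gunicorn.conf.py: Gunicorn configuration files are plain Python modules
bind = "0.0.0.0:8000"
workers = 4
worker_class = "uvicorn.workers.UvicornWorker"

# After SIGTERM, wait up to this many seconds for in-flight requests to finish
graceful_timeout = 30

# Restart workers that stay silent for longer than this many seconds
timeout = 60
```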

multipart/form-data: Unable to parse complex types in a request form

Multipart/form-data is a commonly used content type, but every form field arrives as a string, so a nested model such as `Item` cannot be parsed from a form field directly. As an alternative for nested models, I send the model as a JSON string in a single form field and declare it with Pydantic's `Json` type, which parses and validates it. This supports a file upload and complex form data in the same request.

FastAPI code:

```python
import logging
from typing import List

from fastapi import FastAPI, File, Form, UploadFile
from pydantic import BaseModel, Json

logger = logging.getLogger(__name__)
app = FastAPI()

class TestMetaConfig(BaseModel):
    prop1: str
    prop2: List[int]

class TestModel(BaseModel):
    foo: str
    bar: int
    meta_config: TestMetaConfig

@app.post("/")
async def foo(upload_file: UploadFile = File(...), model: Json[TestModel] = Form(...)):
    # Save the uploaded file
    with open("test.png", "wb") as fh:
        fh.write(await upload_file.read())
    # `model` arrives as a JSON string and is parsed and validated as TestModel
    logger.info(f"model.foo: {model.foo}")
    logger.info(f"model.bar: {model.bar}")
    logger.info(f"model.meta_config: {model.meta_config}")
    return {"foo": model.foo, "bar": model.bar}
```

On the client side, serialize the model with `json.dumps()` and send it as the `model` form field:

```python
import json
from pathlib import Path

import requests

HERE = Path(__file__).parent.absolute()
url = "http://localhost:8000/"
# prop2 deliberately contains strings, so validation will fail
values = {"foo": "hello", "bar": 123, "meta_config": {"prop1": "hello there", "prop2": ["general", "kenobi", 1]}}

with open(HERE / "imgs/foo.png", "rb") as fh:
    files = {"upload_file": fh}
    # The nested model travels as a JSON string in the "model" form field
    resp = requests.post(url, files=files, data={"model": json.dumps(values)})

print(resp.status_code)
print(resp.json())
```

The JSON payload is still fully validated. In this example `prop2` contains strings that are not integers, so the request is rejected and the response lists each invalid item (Pydantic v1 error format):

```json
{"detail": [{"loc": ["body", "model", "meta_config", "prop2", 0], "msg": "value is not a valid integer", "type": "type_error.integer"}, {"loc": ["body", "model", "meta_config", "prop2", 1], "msg": "value is not a valid integer", "type": "type_error.integer"}]}
```

FastAPI and Uvicorn is running synchronously and very slow

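Sanic always reads uploaded files completely into memory, whereas FastAPI (via Starlette) uses a `SpooledTemporaryFile`, which writes the data to disk once it exceeds 1 MB. You can override this threshold on Starlette's `UploadFile` class:

```python
from starlette.datastructures import UploadFile as StarletteUploadFile

# Keep the SpooledTemporaryFile in memory (max_size=0 means it never rolls over to disk)
StarletteUploadFile.spool_max_size = 0
```

With this setting, uploads stay in memory and no longer cause file I/O round trips, so performance should be close to that of Sanic under Uvicorn. The trade-off is that every upload is held entirely in RAM. In more recent Starlette releases, the spooling threshold moved from `UploadFile` to the multipart parser (`starlette.formparsers.MultiPartParser`), so check where it lives in your installed version.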
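Why `0` keeps everything in memory can be checked with the standard library alone: `tempfile.SpooledTemporaryFile` only rolls over to a real file when `max_size` is non-zero and the data grows past it. The sketch below peeks at the private `_rolled` attribute purely for illustration. It is a CPython implementation detail, not public API, and `rolled_to_disk` is a hypothetical helper.

```python
import tempfile


def rolled_to_disk(max_size: int, payload: bytes) -> bool:
    """Write payload to a spooled file and report whether it moved to disk."""
    with tempfile.SpooledTemporaryFile(max_size=max_size) as spooled:
        spooled.write(payload)
        # _rolled is a private CPython attribute, used here only for illustration
        return spooled._rolled


one_mb = 1024 * 1024
print(rolled_to_disk(one_mb, b"x" * (2 * one_mb)))  # True: past 1 MB, rolled to disk
print(rolled_to_disk(0, b"x" * (2 * one_mb)))       # False: max_size=0 never rolls over
```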

Python – FastAPI – Optional option for an UploadFile

Of the options available, the first one is preferable. Part of the fifth option cannot be used, because it does not work for this particular task.

How to pass raw text in requests body

By default, FastAPI rejects a JSON request body that is not valid JSON. One workaround is a custom `APIRoute` class that rewrites the raw body before FastAPI parses it. The example below parses the body with `ast.literal_eval`, which accepts Python literal syntax, and re-encodes the result as JSON:

```python
import json
from ast import literal_eval
from typing import Callable

from fastapi import APIRouter, Body, FastAPI, Request, Response
from fastapi.routing import APIRoute
from pydantic import BaseModel

class CustomRoute(APIRoute):
    def get_route_handler(self) -> Callable:
        original_route_handler = super().get_route_handler()

        async def custom_route_handler(request: Request) -> Response:
            request_body = await request.body()
            # Parse the body as a Python literal (JSON true/false/null are not
            # Python literals and will raise here)
            request_body = literal_eval(request_body.decode("utf-8"))
            # Note that we are overriding the incoming request's body with valid JSON
            request._body = json.dumps(request_body).encode("utf-8")
            return await original_route_handler(request)

        return custom_route_handler

app = FastAPI()
router = APIRouter(route_class=CustomRoute)

class TestModel(BaseModel):
    name: str

@router.post("/")
async def dummy(model: TestModel = Body(...)):
    return model

app.include_router(router)
```

The following request body is not valid JSON, because `"st""ring"` is two adjacent string literals, so FastAPI would normally reject it:

curl -X POST "http://127.0.0.1:8000/" -H "Content-Type: application/json" -d '{ "name": "st""ring"}'

With the custom route, `literal_eval` concatenates the adjacent literals (as Python does), so the request succeeds:

Out: {"name":"string"}

Gunicorn Workers Hangs And Consumes Memory Forever

After reading the FastAPI source code and testing it myself, I am confident that this issue is not a memory leak. However, on a machine with many CPUs, the workers can collectively occupy a large amount of memory. The cause lies in how requests are dispatched (see `request_response()` in Starlette's routing module): synchronous endpoints are run in a thread pool.

```python
# fastapi/routing.py
async def run_endpoint_function(
    *, dependant: Dependant, values: Dict[str, Any], is_coroutine: bool
) -> Any:
    # Only called by get_request_handler. Has been split into its own function to
    # facilitate profiling endpoints, since inner functions are harder to profile.
    assert dependant.call is not None, "dependant.call must be a function"

    if is_coroutine:
        return await dependant.call(**values)
    else:
        return await run_in_threadpool(dependant.call, **values)


# starlette/concurrency.py (older, asyncio-based Starlette version)
async def run_in_threadpool(
    func: typing.Callable[..., T], *args: typing.Any, **kwargs: typing.Any
) -> T:
    loop = asyncio.get_event_loop()
    if contextvars is not None:  # pragma: no cover
        # Ensure we run in the same context
        child = functools.partial(func, *args, **kwargs)
        context = contextvars.copy_context()
        func = context.run
        args = (child,)
    elif kwargs:  # pragma: no cover
        # loop.run_in_executor doesn't accept 'kwargs', so bind them in here
        func = functools.partial(func, **kwargs)
    # None selects the event loop's default ThreadPoolExecutor
    return await loop.run_in_executor(None, func, *args)
```

Synchronous (`def`) endpoints are therefore executed with `loop.run_in_executor` on the default thread pool, whose size depends on the number of CPUs: before Python 3.8 the default was five threads per CPU, so a 40-CPU test machine gets 200 threads. The threads stay alive for reuse by later requests, so memory allocated while handling a request is not necessarily released afterwards. This can add up to significant RAM usage, but it is not a leak. The following test code reproduces the behavior:

```python
import asyncio
import gc
from concurrent import futures

import cv2 as cv
from pympler import tracker

# You can change the number of workers here
executor = futures.ThreadPoolExecutor(max_workers=1)
memory_tracker = tracker.SummaryTracker()

def mm():
    img = cv.imread("cap.jpg", 0)
    detector = cv.AKAZE_create()
    kpts, desc = detector.detectAndCompute(img, None)
    gc.collect()
    # Print the change in memory usage since the previous call
    memory_tracker.print_diff()
    return None

async def main():
    loop = asyncio.get_running_loop()
    while True:
        # Run the CPU-heavy function in the thread pool, as FastAPI does for def endpoints
        await loop.run_in_executor(executor, mm)

if __name__ == "__main__":
    asyncio.run(main())
```

Although this is not a memory leak, I think FastAPI's implementation is still lacking here, because the thread pool size depends entirely on the CPU count. When serving large deep-learning models, this can consume a lot of RAM very quickly, so making the thread pool size configurable would help reduce memory pressure.

If you would like more details about what I found in the source code, see my blog post at https://www.jianshu.com/p/e4595c48d091 (currently only available in Chinese).

Python 3.8 changed how `ThreadPoolExecutor` chooses its default size, capping the number of threads so that machines with many cores do not consume surprisingly large resources:

```python
# From CPython's concurrent/futures/thread.py (ThreadPoolExecutor.__init__, Python 3.8+)
if max_workers is None:
    # ThreadPoolExecutor is often used to:
    # * CPU bound task which releases GIL
    # * I/O bound task (which releases GIL, of course)
    #
    # We use cpu_count + 4 for both types of tasks.
    # But we limit it to 32 to avoid consuming surprisingly large resource
    # on many core machine.
    max_workers = min(32, (os.cpu_count() or 1) + 4)
if max_workers <= 0:
    raise ValueError("max_workers must be greater than 0")
```

On Python 3.8 and later, the default pool is therefore capped at 32 threads, so upgrading helps. If your application does not need to handle a large volume of requests in threads, also consider writing endpoints as `async def` so they run on the event loop instead of the thread pool. Throughput may suffer for blocking code, so measure before switching.

FastAPI+Uvicorn is running slow than Flask+uWSGI

Curious about the range of comments on this question, I ran my own benchmark and analyzed the results.

FastAPI + async def + uvicorn

```python
from fastapi import FastAPI

app = FastAPI(debug=False)

@app.get("/")
async def run():
    return {"message": "hello"}
```

Run command: `uvicorn --log-level error --workers 4 fastapi_test:app > /dev/null 2>&1`

```text
Requests per second: 12160.04 [#/sec] (mean)
Time per request: 41.118 [ms] (mean)
Time per request: 0.082 [ms] (mean, across all concurrent requests)
Transfer rate: 1935.63 [Kbytes/sec] received
```

Flask + gunicorn

```python
import flask

app = flask.Flask(__name__)

@app.route("/")
def run():
    return {"message": "hello"}
```

Run command: `gunicorn --log-level error -w 4 flask_test:app > /dev/null 2>&1`

```text
Requests per second: 15726.21 [#/sec] (mean)
Time per request: 31.794 [ms] (mean)
Time per request: 0.064 [ms] (mean, across all concurrent requests)
Transfer rate: 2641.51 [Kbytes/sec] received
```

These first two tests reproduce the reported result: Flask + Gunicorn is faster than FastAPI + async def + Uvicorn.

FastAPI + async def + gunicorn with uvicorn workers

```python
from fastapi import FastAPI

app = FastAPI(debug=False)

@app.get("/")
async def run():
    return {"message": "hello"}
```

Run command: `gunicorn --log-level error -w 4 -k uvicorn.workers.UvicornWorker fastapi_test:app > /dev/null 2>&1`

```text
Requests per second: 34781.40 [#/sec] (mean)
Time per request: 14.376 [ms] (mean)
Time per request: 0.029 [ms] (mean, across all concurrent requests)
Transfer rate: 4891.13 [Kbytes/sec] received
```

This is nearly 3x the performance of test 1.

FastAPI + def + uvicorn

```python
from fastapi import FastAPI

app = FastAPI(debug=False)

@app.get("/")
def run():
    return {"message": "hello"}
```

Run command: `uvicorn --log-level error --workers 4 fastapi_test:app > /dev/null 2>&1`

```text
Requests per second: 19752.03 [#/sec] (mean)
Time per request: 25.314 [ms] (mean)
Time per request: 0.051 [ms] (mean, across all concurrent requests)
Transfer rate: 2777.63 [Kbytes/sec] received
```

By converting `async def` to `def`, FastAPI outperforms Flask in this setup.

FastAPI + def + gunicorn with uvicorn workers

```python
from fastapi import FastAPI

app = FastAPI(debug=False)

@app.get("/")
def run():
    return {"message": "hello"}
```

Run command: `gunicorn --log-level error -w 4 -k uvicorn.workers.UvicornWorker fastapi_test:app > /dev/null 2>&1`

```text
Requests per second: 20315.62 [#/sec] (mean)
Time per request: 24.612 [ms] (mean)
Time per request: 0.049 [ms] (mean, across all concurrent requests)
Transfer rate: 2856.88 [Kbytes/sec] received
```

So, in conclusion, for a function that can be defined as either async or sync, the performance ranking (fastest first) is:

- FastAPI + async def + gunicorn with uvicorn workers

- FastAPI + def + gunicorn with uvicorn workers

- FastAPI + def + uvicorn

- Flask + gunicorn

- FastAPI + async def + uvicorn

404 Error with OpenAPI

As a temporary fix, you can monkey patch the function that generates the HTML for the Swagger UI documentation so that an earlier version of Swagger UI is used instead:

```python
from fastapi import FastAPI, applications
from fastapi.openapi.docs import get_swagger_ui_html

def swagger_monkey_patch(*args, **kwargs):
    """
    Wrap the function which generates the HTML for the /docs endpoint and
    overwrite the default values for the Swagger JS and CSS URLs.
    """
    return get_swagger_ui_html(
        *args,
        **kwargs,
        swagger_js_url="https://cdn.jsdelivr.net/npm/swagger-ui-dist@3.29/swagger-ui-bundle.js",
        swagger_css_url="https://cdn.jsdelivr.net/npm/swagger-ui-dist@3.29/swagger-ui.css",
    )

# Actual monkey patch
applications.get_swagger_ui_html = swagger_monkey_patch

# Your normal code ...
app = FastAPI()
```

422 unprocessable entity fastapi when trying to call api to get image

A response with status code 422 includes a body with a detailed error message, so check it first. Also make sure the client sends the data the way the endpoint expects. Here is an example of a working request:

```python
import requests

url = "https://localhost/faces/identify"

# The form field name must match the name of the endpoint's UploadFile parameter
with open("test.jpg", "rb") as f:
    files = {"file_upload": f}
    response = requests.post(url, files=files)

print(response.status_code)
```

The request must be a POST with `multipart/form-data` as the content type; `requests` sets this automatically when you pass the `files` argument.

Persisting memory state between requests in FastAPI

Keeping state in a Python process between requests is normal and cannot be avoided: module-level variables and class attributes live as long as the worker process does. This is how Python processes work, not a fault of the web framework or the server. Running one worker process per request (restarting it each time) would discard that state, but it would make the server much slower, so there is no simple way to make class-level state reset for every request. Keeping state in memory is not a security issue in itself; just avoid storing per-request or per-user data in global or class-level variables.

Use `gunicorn` instead `uvicorn` in main

The FastAPI documentation on running Gunicorn with Uvicorn workers is available at https://fastapi.tiangolo.com/deployment/server-workers/.

How to set request headers before path operation is executed

```python
from fastapi import FastAPI

app = FastAPI()

class Middleware:
    """Pure ASGI middleware that sets a request header before the path operation runs."""

    def __init__(self, app):
        self.app = app

    async def __call__(self, scope, receive, send):
        # Pass lifespan and websocket scopes through untouched
        if scope["type"] != "http":
            await self.app(scope, receive, send)
            return
        # Header names in the ASGI scope are lowercase bytes
        headers = [(k, v) for k, v in scope["headers"] if k != b"x-request-id"]
        headers.append((b"x-request-id", b"1"))  # generate the value the way you want
        scope["headers"] = headers
        await self.app(scope, receive, send)

app.add_middleware(Middleware)

@app.get("/")
def home():
    return "Hello World!"
```

For request-scoped data such as request IDs, also have a look at the starlette-context package: https://pypi.org/project/starlette-context/

Depends object has no attribute

I was glad to find an answer that addressed exactly what I needed, so here is what I learned in return. The Security and Scopes approach is most likely the proper solution here, even though the documentation is missing a few details. Before going down that route, though, it is worth checking whether a simple role-checking dependency works.

Does it work? Almost. When you write `Depends(check_role("admin"))`, you are not passing `check_role` itself: `check_role("admin")` is called immediately, and its return value becomes the dependency. If `check_role` is declared with `async def`, that return value is a coroutine object (similar to a promise in JavaScript) rather than a function, which causes the error. Removing `async` from `check_role` fixes the problem:

```python
from fastapi import Depends

# get_current_user is your existing authentication dependency
def check_role(role: str):
    # Plain def: called once when the route is declared, returns the dependency
    async def is_users_role(current_user=Depends(get_current_user)):
        print(f"[check_role] current_user: {current_user}")
        roles = [r.name for r in current_user.roles]
        return role in roles

    return is_users_role
```

Note that `async` moved to `is_users_role`. The rule is to put `async` on the dependency itself, never on the higher-order function that builds it, because `Depends` must receive a callable. I hope this helps you as much as your answer helped me!

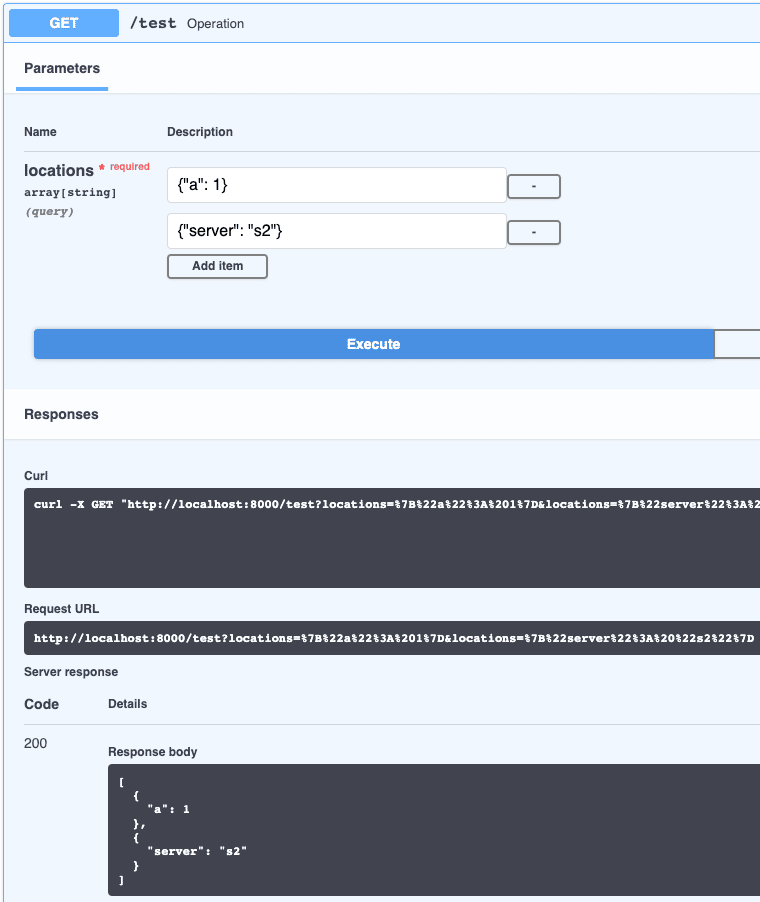

Query parameters – Dictionary support

One way to do this is with a dependency that accepts the `locations` query parameter several times and decodes each value as JSON:

```python
import json
from typing import List

from fastapi import Depends, FastAPI, Query

app = FastAPI(debug=True)

def location_dict(locations: List[str] = Query(...)):
    # Each ?locations=... value is a JSON object encoded as a string
    return list(map(json.loads, locations))

@app.get("/test")
def operation(locations: list = Depends(location_dict)):
    return locations
```

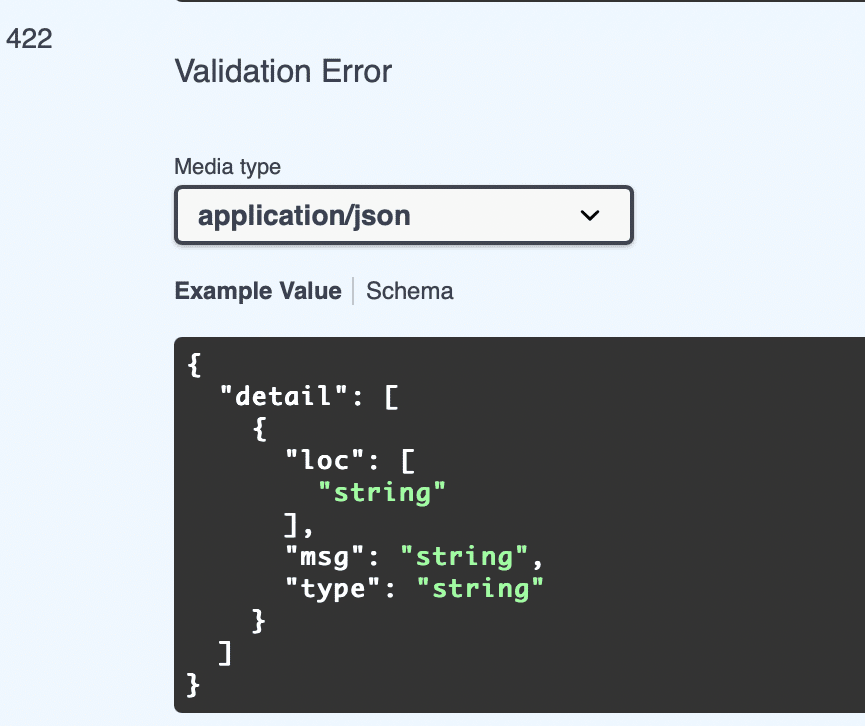
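On the client, each dictionary has to be JSON-encoded and sent as its own `locations` parameter. Here is a small standard-library sketch of building such a query string; `locations_query` is a hypothetical helper:

```python
import json
from urllib.parse import parse_qs, urlencode


def locations_query(locations):
    """Encode each dict as JSON and repeat the `locations` parameter for each one."""
    return urlencode([("locations", json.dumps(loc)) for loc in locations])


query = locations_query([{"lat": 52.5, "lon": 13.4}, {"lat": 48.9, "lon": 2.35}])
print(f"/test?{query}")

# Decoding mirrors what location_dict does on the server
decoded = [json.loads(value) for value in parse_qs(query)["locations"]]
print(decoded)
```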

Allow customization of validation error

If your errors follow a fixed structure, you can use the custom handler described in https://fastapi.tiangolo.com/tutorial/handling-errors/#override-request-validation-exceptions. To make the documentation match, you can also override the default validation error schema defined in `fastapi.openapi.utils`. Take this example server from the documentation:

```python
from typing import Optional

from fastapi import FastAPI

app = FastAPI()

@app.get("/")
def read_root():
    return {"Hello": "World"}

@app.get("/items/{item_id}")
def read_item(item_id: int, q: Optional[str] = None):
    return {"item_id": item_id, "q": q}
```

It shows the default schema at http://localhost:8000/docs:

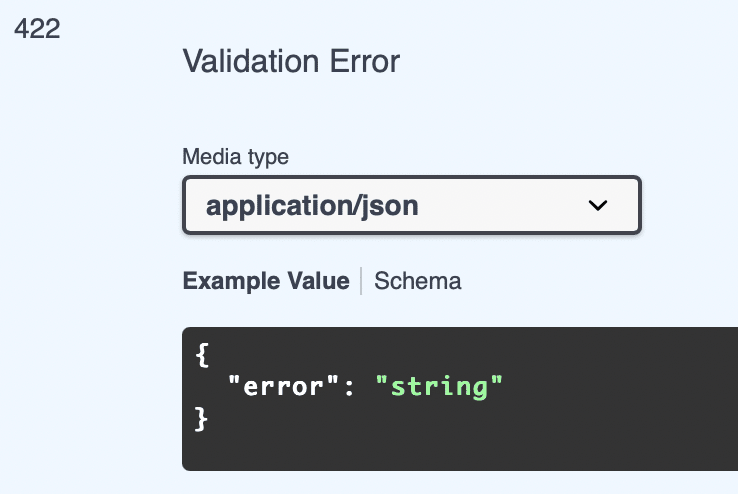

By overwriting `validation_error_response_definition` in `fastapi.openapi.utils`, you can change the validation error schema that the OpenAPI documentation presents:

```python
from typing import Optional

from fastapi import FastAPI

# Import the module we want to modify
import fastapi.openapi.utils as fu

app = FastAPI()

# And override the schema
fu.validation_error_response_definition = {
    "title": "HTTPValidationError",
    "type": "object",
    "properties": {
        "error": {"title": "Message", "type": "string"},
    },
}

@app.get("/")
def read_root():
    return {"Hello": "World"}

@app.get("/items/{item_id}")
def read_item(item_id: int, q: Optional[str] = None):
    return {"item_id": item_id, "q": q}
```

With this override, the `HTTPValidationError` schema shown in `/docs` has a single `error` string property. This only changes the documented schema: the actual 422 response body is still produced by FastAPI's validation exception handler, so combine it with a custom handler if the responses should match the documentation.

Validation in the FastAPI response handler is a lot heavier than expected

After weighing the pros and cons of FastAPI's response handling, validation, and serialization discussed here, I decided not to use these features. Our application code already works with Pydantic models, which are validated when they are created, so validating them again on the way out is an unnecessary performance cost. In addition, Pydantic v1's `.json()` method is more flexible than FastAPI's `jsonable_encoder`: it can use faster libraries such as ujson or orjson, plus custom encoders, configured through a custom `BaseModel` class:

```python
import orjson
from bson import ObjectId
from pydantic import BaseModel as PydanticBaseModel

def orjson_dumps(v, *, default):
    # orjson.dumps returns bytes; decode to match the standard json.dumps
    return orjson.dumps(v, default=default, option=orjson.OPT_NON_STR_KEYS).decode()

class BaseModel(PydanticBaseModel):
    class Config:  # Pydantic v1 configuration
        json_loads = orjson.loads
        json_dumps = orjson_dumps
        json_encoders = {ObjectId: lambda x: str(x)}
```

With a custom FastAPI response class, an endpoint can return either our Pydantic model or an already serialized payload. This gives us more control over options such as `by_alias`, `exclude`, and `include`, and it makes the data flow explicit for developers who are new to the codebase.

```python
from typing import Any

from fastapi.responses import JSONResponse
from pydantic import BaseModel

class PydanticJSONResponse(JSONResponse):
    def render(self, content: Any) -> bytes:
        if content is None:
            return b""
        if isinstance(content, bytes):
            return content  # already serialized
        if isinstance(content, BaseModel):
            # Serialize with Pydantic (v1) instead of jsonable_encoder
            return content.json(by_alias=True).encode(self.charset)
        return content.encode(self.charset)  # assumes an already serialized str
```

Full example:

```python
from typing import Any, List, Optional

import orjson
from bson import ObjectId
from fastapi import FastAPI, status
from fastapi.responses import JSONResponse
from pydantic import BaseModel as PydanticBaseModel

def orjson_dumps(v, *, default):
    # orjson.dumps returns bytes; decode to match the standard json.dumps
    return orjson.dumps(v, default=default, option=orjson.OPT_NON_STR_KEYS).decode()

class BaseModel(PydanticBaseModel):
    class Config:  # Pydantic v1 configuration
        json_loads = orjson.loads
        json_dumps = orjson_dumps
        json_encoders = {ObjectId: lambda x: str(x)}

class Item(BaseModel):
    name: str
    description: Optional[str] = None
    price: float
    tax: Optional[float] = None
    tags: List[str] = []

class PydanticJSONResponse(JSONResponse):
    def render(self, content: Any) -> bytes:
        if content is None:
            return b""
        if isinstance(content, bytes):
            return content
        if isinstance(content, BaseModel):
            return content.json(by_alias=True).encode(self.charset)
        return content.encode(self.charset)

app = FastAPI()

@app.post("/items/", status_code=status.HTTP_200_OK, response_model=Item)
async def create_item():
    item = Item(name="Foo", price=50.2)
    # Returning a Response skips FastAPI's response_model validation and serialization
    return PydanticJSONResponse(content=item)
```

How to test against UploadFile parameter

```python
from http import HTTPStatus
from pathlib import Path

from fastapi.testclient import TestClient

from main import app  # hypothetical module name: import your own FastAPI app

_test_upload_file = Path("/usr/src/app/tests/files", "new-index.json")

with _test_upload_file.open("rb") as fh, TestClient(app) as client:
    # The key must match the endpoint's parameter name: upload_file
    response = client.post("/_config", files={"upload_file": fh})

assert response.status_code == HTTPStatus.CREATED

# Remove the test file from the config directory
_copied_file = Path("/usr/src/app/config", "new-index.json")
_copied_file.unlink()
```

The key in the `files` dictionary must match the endpoint's parameter name, `upload_file`. My first attempt did not use that name, which is why it failed.

Return 404 without using exceptions

Requesting a resource that does not exist returned a response with status code 200 and 404 (an int) in its body, instead of an actual 404 status. A possible solution:

```python
from fastapi import FastAPI, status
from fastapi.responses import JSONResponse

app = FastAPI()

@app.get("/")
async def root(error: bool):
    if error:
        # Return the 404 directly instead of raising HTTPException
        return JSONResponse(status_code=status.HTTP_404_NOT_FOUND, content={"detail": "Not found"})
```

When the `error` query parameter is true, the endpoint returns a response with status code 404; otherwise FastAPI uses its default status code, 200. Returning a `JSONResponse` works well here, although it bypasses `response_model` processing, and a book on FastAPI patterns might well call it an anti-pattern compared to raising `HTTPException`.

How to hide `Schemas` section in `/docs`

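Newer FastAPI versions accept a `swagger_ui_parameters` argument that passes configuration options to Swagger UI. Setting `defaultModelsExpandDepth` to `-1` hides the Schemas section in `/docs`. This only hides it from the UI; the schemas are still published in `/openapi.json`, so it is not a security measure.

```python
from fastapi import FastAPI

app = FastAPI(
    title="API",
    # -1 hides the Schemas (models) section of Swagger UI
    swagger_ui_parameters={"defaultModelsExpandDepth": -1},
)
```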

What is the best tool or ORM to manage database in Fast API?

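To manage the database in a FastAPI application, we recommend SQLModel or SQLAlchemy Core. SQLModel, written by the author of FastAPI, is built on SQLAlchemy and Pydantic, so one class can serve as both the table model and the data model. SQLAlchemy Core is SQLAlchemy's SQL expression layer rather than its ORM, for when you prefer to write queries closer to SQL.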

First-class session support in FastAPI

Our security tools already support this: they integrate with OpenAPI and are compatible with the other components. Cookie-based sessions are not properly documented yet, but the following approach works:

```python
from fastapi import Depends, FastAPI, Form, HTTPException
from fastapi.security import APIKeyCookie
from jose import jwt
from starlette import status
from starlette.responses import HTMLResponse, Response

app = FastAPI()

cookie_sec = APIKeyCookie(name="session")
secret_key = "someactualsecret"  # load from configuration in a real app
users = {"dmontagu": {"password": "secret1"}, "tiangolo": {"password": "secret2"}}

def get_current_user(session: str = Depends(cookie_sec)) -> str:
    # Decode the signed session cookie and return the username it contains
    try:
        payload = jwt.decode(session, secret_key, algorithms=["HS256"])
        username = payload["sub"]
    except Exception:
        raise HTTPException(
            status_code=status.HTTP_403_FORBIDDEN, detail="Invalid authentication"
        )
    if username not in users:
        raise HTTPException(
            status_code=status.HTTP_403_FORBIDDEN, detail="Invalid authentication"
        )
    return username

@app.get("/login")
def login_page():
    return HTMLResponse(
        """
        <form action="/login" method="post">
        Username: <input type="text" name="username" required>
        <br>
        Password: <input type="password" name="password" required>
        <input type="submit" value="Login">
        </form>
        """
    )

@app.post("/login")
def login(response: Response, username: str = Form(...), password: str = Form(...)):
    if username not in users:
        raise HTTPException(
            status_code=status.HTTP_403_FORBIDDEN, detail="Invalid user or password"
        )
    db_password = users[username]["password"]
    if password != db_password:
        raise HTTPException(
            status_code=status.HTTP_403_FORBIDDEN, detail="Invalid user or password"
        )
    token = jwt.encode({"sub": username}, secret_key, algorithm="HS256")
    # httponly keeps the session cookie out of reach of JavaScript
    response.set_cookie("session", token, httponly=True)
    return {"ok": True}

@app.get("/private")
def read_private(username: str = Depends(get_current_user)):
    return {"username": username, "private": "get some private data"}
```

List as query param

This is an effective solution:

```python
from typing import List

from fastapi import FastAPI, Query

app = FastAPI()

@app.get("/test")
async def test(query: List[int] = Query(...)) -> List[int]:
    # /test?query=1&query=2 returns [1, 2]
    return query
```
